Man Jailed Due to Faulty AI Identification Free After 17 Months

St. Louis police AI identification program led to an innocent Black man being jailed for 17 months

A small but growing number of police departments are adopting software products that use artificial intelligence (AI) to draft police reports for officers. Police reports play an important role in criminal investigations and prosecutions, and introducing novel AI language-generating technology into the criminal justice system raises significant civil liberties and civil rights concerns.

While there are certainly benefits to using AI-powered technologies to create more efficient work processes, the technology, as anyone who has experimented with Large Language Models (LLMs) like ChatGPT knows, is quirky, unreliable, and prone to making up facts. AI is also biased. Because LLMs are trained on something close to the entire Internet, they inevitably absorb the racism, sexism, and other biases that permeate our culture. Even if an AI program doesn’t make explicit errors or exhibit obvious biases, it could still spin things in subtle ways that officers and other justice-system officials don’t notice.

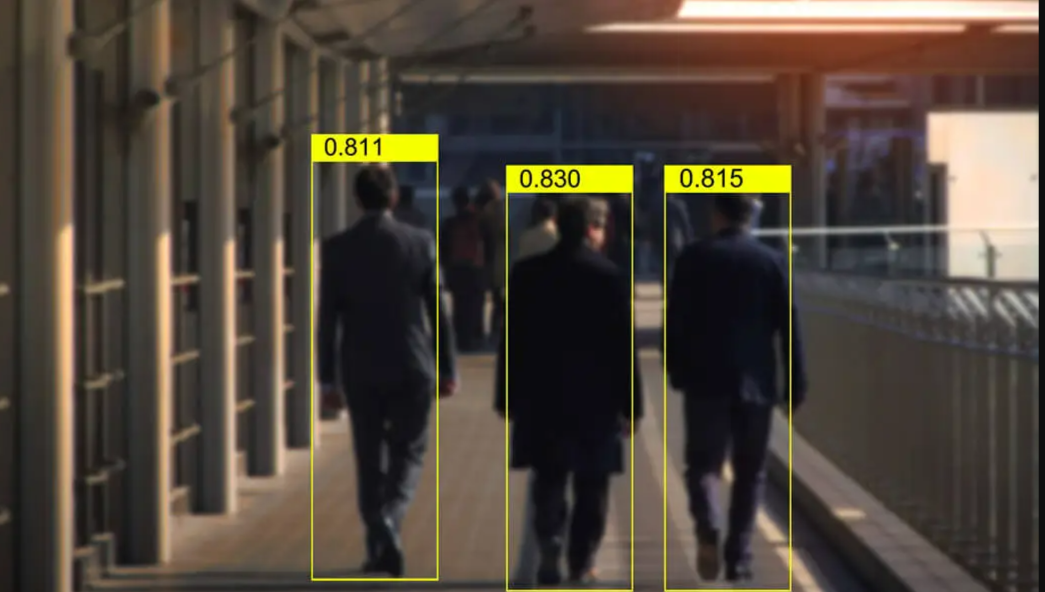

In St. Louis County, Missouri, Chris Gatlin spent 17 months in jail for a crime that an artificial intelligence program said he committed. He was freed only after bodycam footage finally surfaced and county prosecutors learned there was no real evidence against him. Gatlin had been identified by a computer facial recognition program that analyzed a grainy surveillance photo of an assault suspect on the MetroLink transit system.

“That knock that came on the door blew me away because I wasn’t expecting it to be the police,” Gatlin recounted in an interview with FOX2 News, describing the moment officers invaded his home. “It’s really not right, to get accused of something you know you didn’t do,” he added.

Even the assault victim didn’t initially think Gatlin was the right guy. During the photo lineup, the victim warned police about his memory, telling the officer he was in and out of consciousness during the assault. The victim initially picked two different suspects, neither of whom was Gatlin.

As correctional and justice departments increasingly deploy AI surveillance tools without oversight or accountability, they strengthen rather than disrupt the foundational systems of racial targeting and profiling. While Gatlin has secured his physical freedom, the apparatus responsible for his jailing faces no consequences. Instead, authorities, by and large, actively defend expanding these technologies of racial control, deliberately ignoring how these tools perpetuate centuries of anti-Black violence.

For more information, you can read and download the December 2024 American Civil Liberties Union report “Police Departments’ Use of AI to Draft Police Reports.”